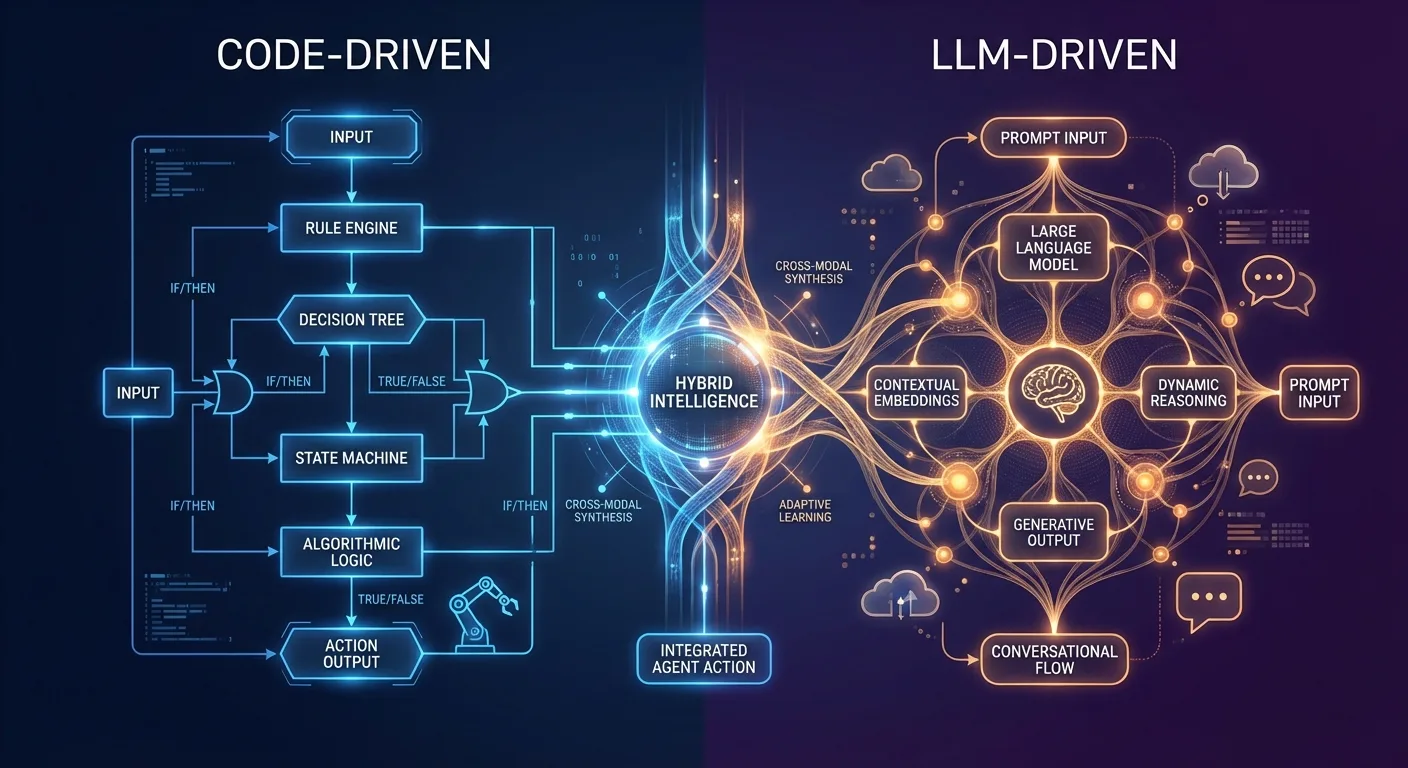

What Are Code-Driven AI Agents?

Code-driven agents follow deterministic workflows. Think of them as state machines with predefined paths, decision trees, and explicit logic branches.
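To make the state-machine framing concrete, here is a minimal sketch of a code-driven flow as an explicit transition table. The states and event names (`GREETING`, `email_valid`, and so on) are hypothetical and chosen only for illustration; they are not taken from any particular product.

```python
from enum import Enum, auto


class State(Enum):
    GREETING = auto()
    COLLECT_EMAIL = auto()
    CONFIRM = auto()
    DONE = auto()


# Explicit transition table: (current state, event) -> next state.
# Every path the agent can take is listed here (illustrative names only).
TRANSITIONS = {
    (State.GREETING, "start"): State.COLLECT_EMAIL,
    (State.COLLECT_EMAIL, "email_valid"): State.CONFIRM,
    (State.COLLECT_EMAIL, "email_invalid"): State.COLLECT_EMAIL,
    (State.CONFIRM, "yes"): State.DONE,
    (State.CONFIRM, "no"): State.COLLECT_EMAIL,
}


def next_state(current: State, event: str) -> State:
    """Return the next state, or raise if the event is not handled."""
    try:
        return TRANSITIONS[(current, event)]
    except KeyError:
        raise ValueError(f"No transition from {current.name} on {event!r}") from None
```

The same table shows both the predictability and the brittleness discussed in this section: identical inputs always take the same path, and any event that is not in the table is rejected outright.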

Strengths of Code-Driven Agents:

- Predictability: Same input always produces the same output

- Debugging: Stack traces and logs are straightforward

- Cost-Efficient: No LLM inference needed for every decision

- Compliance-Friendly: Easier to audit for regulated industries (healthcare, finance)

- Low Latency: No API call delays for simple decisions

Weaknesses:

- Brittle: Struggles with unexpected user inputs or novel scenarios

- Maintenance Overhead: Every edge case requires new code

- Limited Creativity: Can't adapt to user phrasing variations without explicit rules

- Scalability Challenges: Handling 100+ user intents becomes unwieldy

What Are LLM-Driven AI Agents?

LLM-driven agents use large language models as the decision-making engine. Instead of hardcoded logic, the agent sends context to an LLM and uses its response to determine next actions.
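As a rough sketch of that loop, the function below builds a prompt from the conversation history and page state, sends it to a model, and parses the reply as the next action. The `LLM` type, the prompt wording, and the `fake_llm` stub are all assumptions made so the example runs offline; a real agent would call a hosted model here.

```python
import json
from typing import Callable

# Stand-in type: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]


def decide_next_action(llm: LLM, history: list[str], page_state: str) -> dict:
    """Send context to the model and parse its reply as the next action."""
    prompt = (
        "You are a demo assistant.\n"
        f"Page state: {page_state}\n"
        "Conversation so far:\n" + "\n".join(history) + "\n"
        'Reply with JSON: {"action": "...", "argument": "..."}'
    )
    return json.loads(llm(prompt))


def fake_llm(prompt: str) -> str:
    """Canned model reply (hypothetical) so the sketch needs no network."""
    return '{"action": "navigate", "argument": "pricing"}'


action = decide_next_action(fake_llm, ["user: show me pricing"], "home")
```

Note that nothing in this function decides what happens next; the model's reply does. That is the source of both the flexibility and the unpredictability listed in this section.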

Strengths of LLM-Driven Agents:

- Flexibility: Handles unexpected inputs gracefully

- Natural Conversations: Understands nuance, context, and user intent

- Rapid Iteration: Change behavior by tweaking prompts, not rewriting code

- Contextual Reasoning: Can make decisions based on conversation history and page state

- Creative Problem-Solving: Suggests solutions you didn't explicitly program

Weaknesses:

- Unpredictability: Same input might produce different outputs

- Debugging Nightmares: "Why did the agent say that?" becomes hard to answer

- Cost: Every decision requires a paid API call to a provider such as OpenAI or Anthropic

- Latency: Network round-trips and model inference add roughly 200-500ms per decision

- Compliance Risks: Harder to guarantee regulatory adherence

The Demogod Approach: Hybrid Architecture

At Demogod, we don't force an either/or choice. Our voice AI demo agents use a hybrid architecture that combines the best of both worlds:

1. Code-Driven Orchestration Layer

The outer control loop is deterministic: connection handling, authentication, security checks, DOM traversal, and fallback logic.

2. LLM-Driven Conversational Layer

The LLM handles natural language understanding: user intent classification, contextual responses, dynamic product recommendations, and handling ambiguous queries.

3. Knowledge Retrieval Layer

Vector databases and structured data stores supply product documentation, FAQ responses, and user profile data to the other two layers.
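For readers unfamiliar with vector retrieval, here is a toy sketch of the lookup step: rank stored documents by cosine similarity to a query vector. The three-dimensional vectors and the FAQ texts are hand-written placeholders, not real embeddings, and a production system would use an embedding model and a vector database instead of a Python list.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Toy "index": hand-written vectors standing in for real embeddings.
DOCS = [
    ("How do I reset my password?", [0.9, 0.1, 0.0]),
    ("What does the Pro plan cost?", [0.1, 0.9, 0.1]),
    ("How do I export reports?", [0.0, 0.2, 0.9]),
]


def retrieve(query_vector: list[float], top_k: int = 1) -> list[str]:
    """Return the top_k document texts most similar to the query vector."""
    ranked = sorted(DOCS, key=lambda doc: cosine(query_vector, doc[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]
```

The retrieved text would then be passed to the conversational layer as context, so the LLM answers from documentation rather than from memory.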

When to Use Each Approach

Use Code-Driven Agents When:

- High-Stakes Operations: Financial transactions, medical advice, legal compliance

- Predictable User Flows: Onboarding wizards, form submissions, checkout processes

- Cost Sensitivity: Need to minimize API costs at scale

- Latency Requirements: Sub-100ms response times required

- Auditability Matters: Need to explain every decision to regulators

Use LLM-Driven Agents When:

- Open-Ended Conversations: Sales demos, customer support, product discovery

- Contextual Understanding: User intent varies widely

- Rapid Iteration: Launching MVP, testing product-market fit

- Personalization: Tailoring responses to user history/preferences

- Complex Reasoning: Multi-step decision trees that would explode in code

Use Hybrid Agents When:

Always. Seriously. Pure code-driven agents feel robotic. Pure LLM-driven agents are unpredictable. The winning formula is:

Code for structure. LLM for flexibility.

Real-World Hybrid Agent: Demogod Demo Assistant

Here's how Demogod's voice AI demo agent works in practice during a SaaS product demo:

- User lands on demo page (code-driven: detect page load, initialize WebRTC)

- Agent greets user with natural welcome (LLM-generated based on context)

- User requests feature demo (LLM classifies intent)

- Agent navigates to feature (code-driven: DOM traversal)

- Agent explains feature naturally (LLM generates contextual explanation)

- Agent suggests logical next steps (LLM-driven personalization)

This hybrid approach gives us both natural conversation and reliable execution.
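The steps above can be sketched as a single turn handler in which the LLM only classifies and explains, while code owns the navigation. This is an illustrative outline, not Demogod's actual implementation: `FEATURE_SELECTORS`, the intent labels, and the `classify` and `explain` callables are hypothetical names.

```python
from typing import Callable

# Hypothetical map from intent label to a CSS selector on the demo page.
FEATURE_SELECTORS = {
    "show_dashboard": "#dashboard",
    "show_billing": "#billing",
}


def handle_turn(
    utterance: str,
    classify: Callable[[str], str],
    explain: Callable[[str], str],
) -> dict:
    """One demo turn: the LLM picks the intent, code performs the navigation."""
    intent = classify(utterance)              # LLM-driven step
    selector = FEATURE_SELECTORS.get(intent)  # code-driven lookup
    if selector is None:
        # Deterministic fallback when the intent is not a known feature.
        return {"navigate_to": None, "say": "Which feature would you like to see?"}
    return {"navigate_to": selector, "say": explain(intent)}  # LLM-driven wording
```

Because the selector comes from a fixed table, the model can never steer the page somewhere the code layer has not explicitly allowed.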

Implementation Tips for Hybrid Agents

1. Define Clear Action Boundaries

Your LLM should output structured action requests, not raw code. Your code layer validates and executes safely.
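One way to enforce that boundary is an allowlist check between the model's reply and anything that executes. The sketch below assumes the LLM replies with JSON of the form `{"action": ..., "args": {...}}`; that shape and the action names in `ALLOWED_ACTIONS` are illustrative assumptions.

```python
import json

# Allowlist of action names and their required argument names (hypothetical).
ALLOWED_ACTIONS = {
    "navigate": {"target"},
    "highlight": {"selector"},
    "speak": {"text"},
}


def validate_action(raw: str) -> dict:
    """Parse an LLM reply and reject anything outside the allowlist."""
    try:
        action = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("reply is not valid JSON") from exc
    if not isinstance(action, dict):
        raise ValueError("reply must be a JSON object")
    name = action.get("action")
    if not isinstance(name, str) or name not in ALLOWED_ACTIONS:
        raise ValueError(f"action {name!r} is not allowed")
    args = action.get("args")
    if not isinstance(args, dict) or set(args) != ALLOWED_ACTIONS[name]:
        raise ValueError(f"unexpected arguments for {name!r}")
    return action
```

Only a validated dictionary reaches the execution layer, so a malformed or adversarial reply fails loudly instead of running.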

2. Use Prompt Engineering for Consistency

Give your LLM explicit output formats to ensure predictable structured responses.
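As an example of an explicit output format, the prompt below asks for exactly one label from a fixed list, and the parser collapses anything off-format to a safe default. The intent labels and the prompt wording are placeholders to adapt, not a recommended taxonomy.

```python
# Hypothetical intent labels; "other" doubles as the safe default.
INTENTS = ["show_feature", "ask_pricing", "other"]

PROMPT_TEMPLATE = """Classify the user's message.
Respond with exactly one word from this list and nothing else:
{intents}

User message: {message}
"""


def build_prompt(message: str) -> str:
    """Fill the template with the allowed labels and the user's message."""
    return PROMPT_TEMPLATE.format(intents=", ".join(INTENTS), message=message)


def parse_intent(reply: str) -> str:
    """Normalize the reply; anything off-format collapses to 'other'."""
    label = reply.strip().lower()
    return label if label in INTENTS else "other"
```

Pairing a strict prompt with a strict parser means downstream code only ever sees one of the known labels, even when the model adds extra words.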

3. Implement Fallback Chains

LLM decision fails → Rule-based fallback → Human handoff
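A minimal sketch of that chain follows: try the LLM handler first, fall back to keyword rules, and hand off to a person if neither produces a decision. The handler signatures, the keyword rule, and the `handoff_to_human` label are assumptions for illustration.

```python
from typing import Callable, Optional

# A handler returns a decision, or None when it cannot decide.
Handler = Callable[[str], Optional[str]]


def rule_based(utterance: str) -> Optional[str]:
    """Keyword rules (illustrative); returns None when nothing matches."""
    if "price" in utterance.lower():
        return "ask_pricing"
    return None


def human_handoff(utterance: str) -> str:
    """Last resort: always succeeds by escalating to a person."""
    return "handoff_to_human"


def resolve(utterance: str, llm_handler: Handler) -> str:
    """Try the LLM, then the rules, then hand off to a person."""
    for handler in (llm_handler, rule_based):
        try:
            result = handler(utterance)
        except Exception:
            result = None  # treat any handler error as "no decision"
        if result is not None:
            return result
    return human_handoff(utterance)
```

Catching every exception from a handler is a deliberate simplification here; in practice you would likely log the error and catch only the failure types you expect, such as timeouts.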

4. Monitor LLM Decision Quality

Track metrics: intent classification accuracy, user frustration signals, conversation abandonment rate, and cost per conversation.
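Those four metrics can be tracked with a few counters. The sketch below assumes you have labeled examples to score intent predictions against and some per-conversation signal for frustration and abandonment; how those signals are detected is outside its scope, and all field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AgentMetrics:
    intents_checked: int = 0
    intents_correct: int = 0
    conversations: int = 0
    abandoned: int = 0
    frustration_signals: int = 0
    total_cost_usd: float = 0.0

    def record_intent(self, predicted: str, expected: str) -> None:
        """Score one intent prediction against a labeled example."""
        self.intents_checked += 1
        if predicted == expected:
            self.intents_correct += 1

    def record_conversation(self, cost_usd: float, abandoned: bool, frustrated: bool) -> None:
        """Record the outcome and API cost of one finished conversation."""
        self.conversations += 1
        self.total_cost_usd += cost_usd
        self.abandoned += int(abandoned)
        self.frustration_signals += int(frustrated)

    def summary(self) -> dict:
        """Return rates; denominators fall back to 1 to avoid dividing by zero."""
        checked = self.intents_checked or 1
        convs = self.conversations or 1
        return {
            "intent_accuracy": self.intents_correct / checked,
            "frustration_rate": self.frustration_signals / convs,
            "abandonment_rate": self.abandoned / convs,
            "cost_per_conversation_usd": self.total_cost_usd / convs,
        }
```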

5. Cache LLM Responses for Common Patterns

If 80% of users ask the same 20 questions, cache those responses to reduce API costs.
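A simple version of this is an in-memory cache keyed on a normalized form of the question, sketched below. The normalization rule and the `CachedResponder` class are illustrative assumptions; a production cache would also need expiry and, for paraphrases, something smarter than string normalization.

```python
import re
from typing import Callable


def normalize(question: str) -> str:
    """Lowercase and collapse punctuation and whitespace into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", question.lower()).strip()


class CachedResponder:
    """Wraps an LLM callable and reuses answers for repeated questions."""

    def __init__(self, llm: Callable[[str], str]) -> None:
        self._llm = llm
        self._cache: dict[str, str] = {}
        self.llm_calls = 0  # counts cache misses, useful for cost tracking

    def answer(self, question: str) -> str:
        key = normalize(question)
        if key not in self._cache:
            self.llm_calls += 1
            self._cache[key] = self._llm(question)
        return self._cache[key]
```

This only suits answers that do not depend on the individual user or the current page; personalized responses should bypass the cache.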

The Future: Agentic Workflows

The next evolution is agentic workflows where multiple specialized agents collaborate:

- Router Agent (LLM-driven): Decides which specialist to invoke

- Navigation Agent (code-driven): Handles page traversal

- Explanation Agent (LLM-driven): Generates natural language responses

- Security Agent (code-driven): Enforces access control

This multi-agent architecture is how Demogod will scale to handle complex enterprise workflows while maintaining reliability.
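To illustrate how these roles could fit together, here is a small dispatch sketch: a router chooses a specialist, a code-driven security check gates the call, and the specialist runs. Everything in it is hypothetical, including the role names, the permission table, and the stub agents; it shows the shape of the idea rather than a planned design.

```python
from typing import Callable

Agent = Callable[[str], str]


def navigation_agent(request: str) -> str:
    """Code-driven specialist (stub)."""
    return f"navigated:{request}"


def explanation_agent(request: str) -> str:
    """LLM-driven specialist in the article; stubbed here."""
    return f"explained:{request}"


SPECIALISTS: dict[str, Agent] = {
    "navigate": navigation_agent,
    "explain": explanation_agent,
}

# Hypothetical permission table: which specialists each role may invoke.
ALLOWED = {"viewer": {"explain"}, "admin": {"explain", "navigate"}}


def security_check(role: str, specialist: str) -> bool:
    """Code-driven access control; unknown roles get no access."""
    return specialist in ALLOWED.get(role, set())


def dispatch(request: str, role: str, route: Callable[[str], str]) -> str:
    """Route the request, enforce access control, then run the specialist."""
    specialist = route(request)  # router agent (LLM-driven in the article)
    if specialist not in SPECIALISTS:
        return "unknown_specialist"
    if not security_check(role, specialist):
        return "denied"
    return SPECIALISTS[specialist](request)
```

Keeping the security check in plain code means the router's choice is always verified by logic that behaves the same way every time.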

Conclusion: Choose Your Architecture Wisely

The code-driven vs. LLM-driven debate isn't about picking sides. It's about understanding the strengths and tradeoffs of each approach and combining them intelligently.

At Demogod, we believe the future of AI agents is hybrid by default:

- Code-driven for reliability, speed, and compliance

- LLM-driven for flexibility, personalization, and natural conversation

The best AI agents don't force users to adapt to rigid workflows. They blend structured reliability with conversational intelligence.

That's the Demogod difference.

Try Demogod's Voice AI Demo Agent: Visit demogod.me/demo and experience a voice-controlled product demo that combines code-driven reliability with LLM-powered natural conversation.

One line of integration. Unlimited conversational demos.

DEMOGOD